Less is more? Facebook, as you certainly know by now, announced last week that it’s making changes to News Feed. Posts from friends and family are in; content from Pages (including publishers) is out. There are a lot of questions about what this means for publishers. There are also questions about what it means for the spread of fake news on the platform.

- “While it may cut down incidental exposure to misinformation, the changes could, in some cases, only harden filter bubbles with a steady stream of content from people with similar ideologies. Meanwhile, a retrenchment from News Feed into more walled-off Groups and communities could exacerbate exposure to misinformation.” (Charlie Warzel, BuzzFeed)

- “Facebook’s head of news partnerships, Campbell Brown, also wrote to some major publishers that the changes would cause people to see less content from ‘publishers, brands, and celebrities,’ but that ‘news stories shared between friends will not be impacted,’ which could suggest that fake news might get promoted over content directly from legitimate news outlets.” (Alex Kaplan, Media Matters)

- “The accounts Facebook suggests at the top of your feed won’t have necessarily gone through any sort of vetting process. This is bad news if your fake news radar is a little rusty and your aunt is prone to sharing links from questionable sources.” (Marissa Miller, Teen Vogue)

Facebook’s solution to Fake News is to publish less news unless your racist uncle shares news with you.

— Anthony De Rosa (@Anthony) January 12, 2018

Adam Mosseri, Facebook’s VP of News Feed, sort of responded to some of these concerns on Twitter.

There are definitely risks, including more uncivil conversations, less informative stories. We just need to work on each.

— Adam Mosseri (@mosseri) January 14, 2018

Given that the vast majority of interactions between people are on friends’ stories, which are much less often problematic than the equivalent on page posts, it seems likely that this will create more good than bad.

— Adam Mosseri (@mosseri) January 14, 2018

Meanwhile, The New York Times spoke with some of the publishers from the countries where Facebook has been experimenting with an “Explore” feed that shunted news into a separate section — experiments that aren’t identical to the new News Feed changes that Facebook is rolling out in the U.S., but are similar. In some of those countries, at least, publishers reported “unintended consequences” of the change. For instance:

In Slovakia, where right-wing nationalists took nearly 10 percent of Parliament in 2016, publishers said the changes had actually helped promote fake news. With official news organizations forced to spend money to place themselves in the News Feed, it is now up to users to share information.

“People usually don’t share boring news with boring facts,” said Filip Struhárik, the social media editor of Denník N, a Slovakian subscription news site that saw a 30 percent drop in Facebook engagement after the changes. Mr. Struhárik, who has been cataloging the effects of Facebook Explore through a monthly tally, has noted a steady rise in engagement on sites that publish fake or sensationalist news.

And in Bolivia:

Bolivia has also seen an increase in fake news as the established news sites are tucked behind the Explore function.

During nationwide judicial elections in December, one post widely shared on Facebook claimed to be from an election official saying votes would be valid only if an X was marked next to the candidate’s name. Another post that day said government officials had put pens with erasable ink in the voting booths.

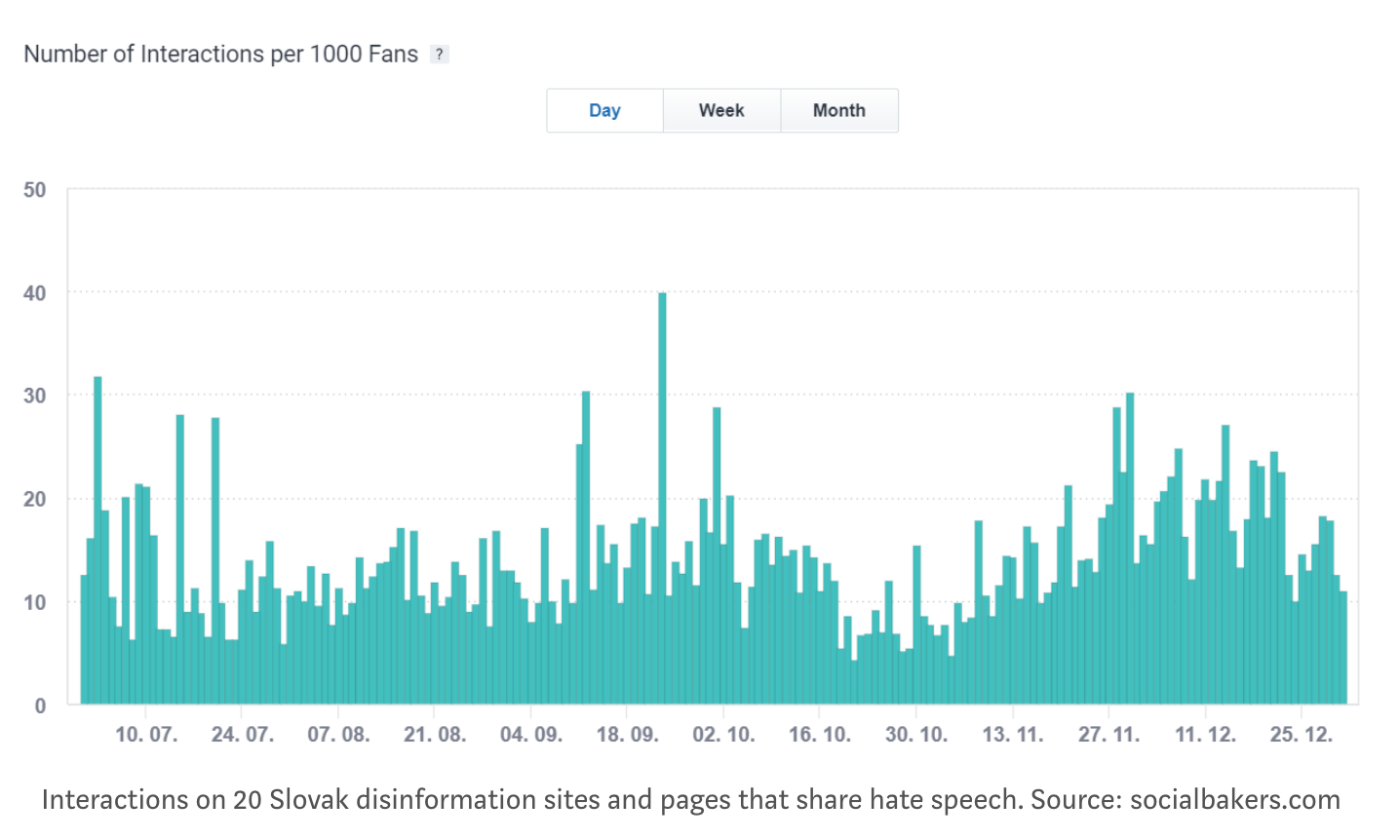

It’s hard to quantify this. In a post on Medium, Struhárik, the Slovakian social media editor mentioned above, shared a chart suggesting that, in Slovakia, “interactions on fake news pages haven’t fallen since [the] Explore Feed test started.”

The Explore test started October 19. That is the point about two-thirds of the way into this chart where interactions dip. But the fake news sites recover quickly and end up with higher engagement than before the change.

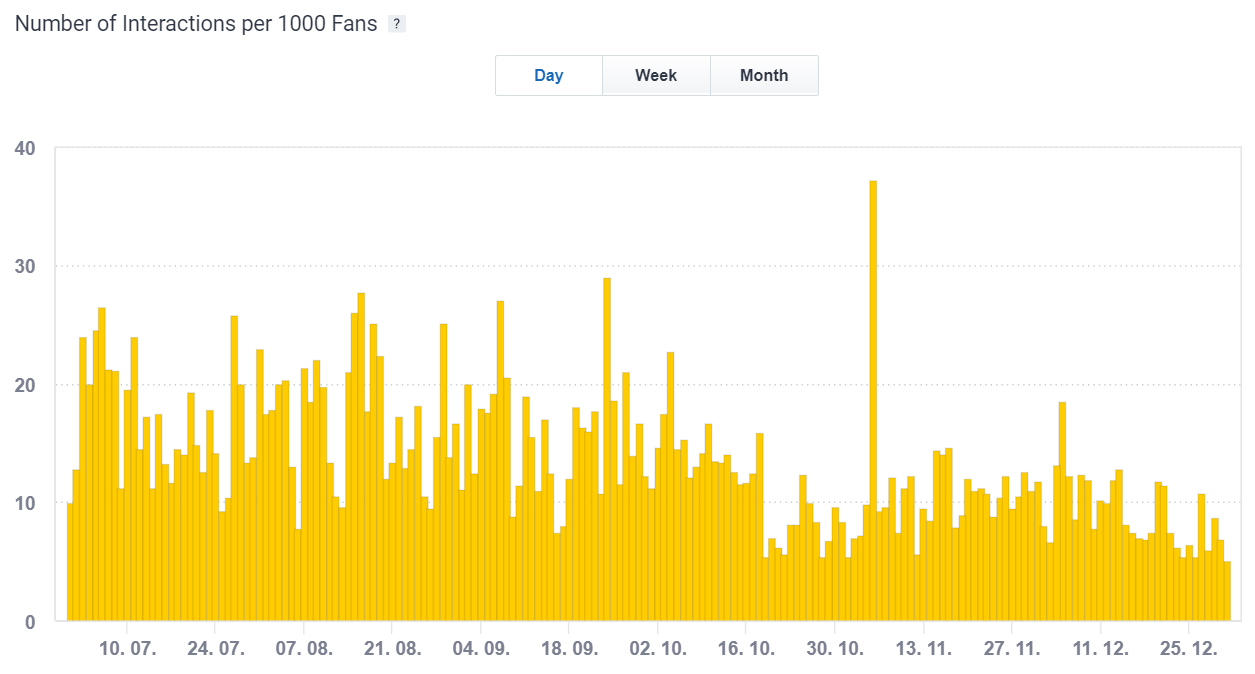

Compare that to interactions for mainstream Slovakian sites over the same period:

Their interactions dipped at the same time — but still haven’t recovered.

— The European Commission announces its panel of experts on fake news and online misinformation. Among the 39 members: First Draft’s Claire Wardle, Reuters Institute’s Rasmus Kleis Nielsen, and International Fact Checking Network/Poynter’s Alexios Mantzarlis.

Nielsen is putting together an “open bibliography of relevant, evidence-based research on problems of misinformation,” which anyone can view and contribute to. For now, the broadest research comes from the U.S., and he hasn’t been able to find information on the scope of the problem in the EU: “How widespread a problem is ‘fake news’ in EU member states? It seems that we simply do not know.”

Can it really be true that there is not a single, publicly-available, evidence-based study measuring overall scale & scope of "fake news" in a EU country? The open bibliography on misinformation has grown to 10 pages already but no studies on reach/volume? https://t.co/yNnycrH8Ju

— Rasmus Kleis Nielsen (@rasmus_kleis) January 17, 2018

Much valuable research, and interesting case studies. But nothing akin to @andyguess et al “Selective Exposure to Misinformation: Evidence from the Consumption of Fake News during the 2016 US Campaign.” https://t.co/ohVzrm8rA6 or other attempts to measure overall reach+volume.

— Rasmus Kleis Nielsen (@rasmus_kleis) January 17, 2018

This article first appeared on Nieman Lab. It has been condensed and republished on IJNet with permission.

Main image CC-licensed by Flickr via The Public Domain Review.