Do liberals and conservatives react differently to disinformation? How many political conversations are actually happening online? Does disinformation provided by a bot have different effects on us than disinformation provided by a real person? What is being online actually, you know, doing to us?

A report released this week by the philanthropic Hewlett Foundation, “Social media, political polarization, and political disinformation: A review of the scientific literature,” aims to lay out what we do and don’t know about the relationship between those topics. The report is especially useful as a sort of catalog of the questions we still have; it also flags where existing research is in conflict.

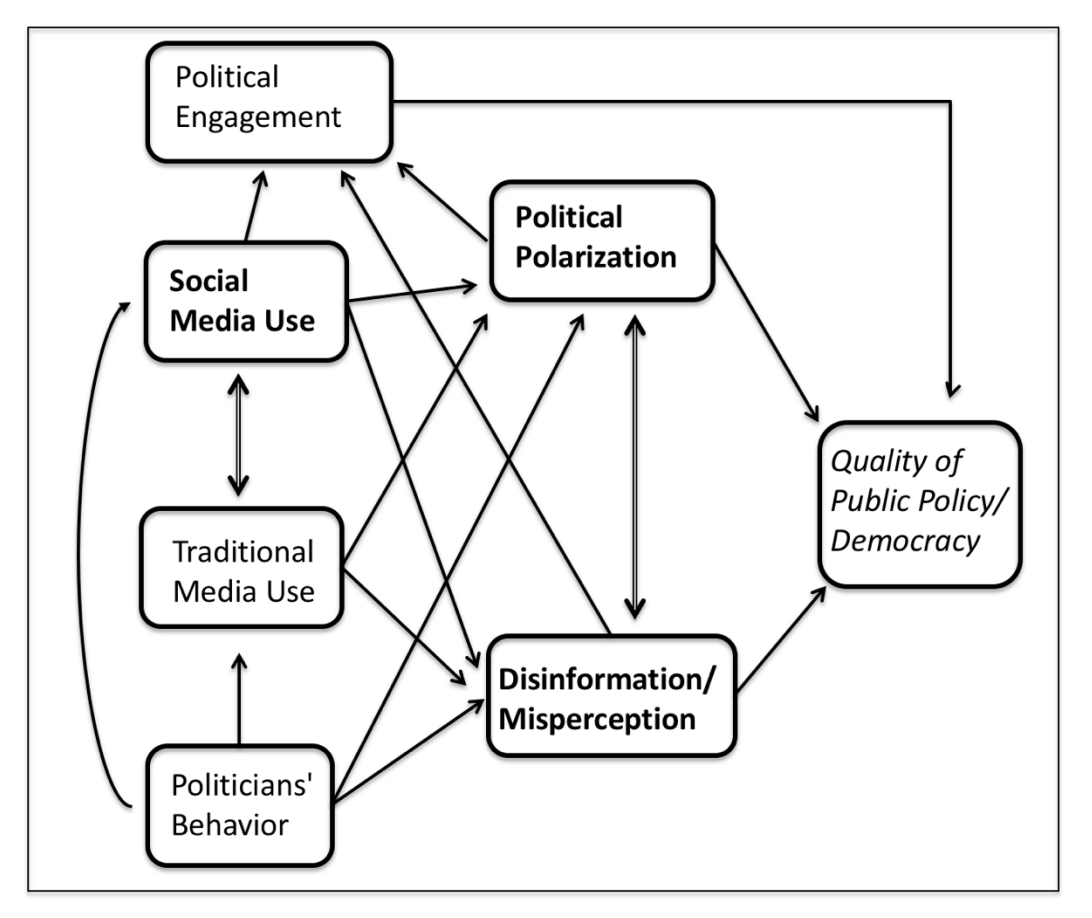

The report, which includes research reviews written by several academics who each specialize in a particular area (lead author Joshua A. Tucker, Andrew Guess, Pablo Barberá, Cristian Vaccari, Alexandra Siegel, Sergey Sanovich, Denis Stukal, and Brendan Nyhan), begins with a chart of all the “moving pieces.” The authors deliberately chose not to link social media use to the quality of democracy; social media is neither inherently democratic nor undemocratic, they write, “but simply an arena in which political actors — some which may be democratic and some which may be anti-democratic — can contest for power and influence.” (In 2010, the Journal of Democracy featured an article about social media entitled “Liberation technology.” By 2017, the same journal was running “Can democracy survive the Internet?”)

So. Here are some of the things we don’t know, some of which kind of overlap with other things we don’t know.

What’s an “online political conversation”? What’s an “echo chamber”?

Forget just “fake news” and “disinformation” (though we need common definitions for those, too): There’s not even consensus, across scholars, on what an “online political conversation” or an “echo chamber” actually is. Lots of research has focused on the amount of disagreement that people are exposed to online, but we don’t have a standard definition for what kind of conversation counts as “political,” what counts as disagreement, or even what counts as a conversation in the first place (for instance, what if one side of it is a bot?). And then there’s “echo chamber”: “To date, not only is there no consensus on what level of selective exposure constitutes an ‘echo chamber,’ there is not even any consensus on what metric or summary statistic should be used to measure this selective exposure.”

Are different online groups exposed to different levels of disinformation? And are their reactions to it different?

Do liberals and conservatives see different amounts of disinformation online, for instance? What about people who are on social media a lot? We need to know more about how different people react to the same things; the authors suggest we could start by studying “whether extremists tend to react differently than moderates,” or whether conservatives react differently than liberals. “If either disinformation or political polarization affect conservatives and liberals differently, then this type of research would seem to be particularly important moving forward.”

How do individuals react when they’re exposed to disinformation?

Some researchers have found a “backlash or boomerang” effect, where people dig their heels in even more strongly on their original position when they’re exposed to information that contradicts it. But if this is actually happening (and some researchers think it isn’t), it’s unclear how much of it is simply “partisan cheerleading” versus legitimate belief.

Various studies about the “extent to which disinformation shared on social media has any effect on citizens’ political beliefs,” or whether it increases polarization, have conflicting findings, partly because the studies use different definitions of what misinformation and polarization are. “Sometimes the differences between rumors, false information, misleading information, and hyperpartisan information are blurry.” Plus, it’s not just about who you vote for: We need more research into how exposure to disinformation affects people’s viewpoints on various issues, their overall interest in politics, and their trust in institutions.

Studies are usually about one platform, but lots of people are on multiple platforms (including offline media).

Most social media research focuses on Twitter; as Jonathan Albright recently pointed out, Twitter isn’t where most people are. Facebook is, but we don’t have a lot of research on how Facebook use affects politics. (Facebook makes itself hard to study!) The report also calls for more analysis of Google search results. And then there’s traditional media: How does it interact with social media? How much are “political rumors from social media” migrating into traditional media stories? What are the social media strategies of hyperpartisan media outlets?

How much does U.S.-based research apply to the rest of the world?

The two-party political system in the U.S. is unusual. It’s also still unclear how “digital media can contribute to polarization and disinformation in more unstable, hybrid regimes and non-democratic regimes.”

The full report, which contains many more things we don’t know (and some we do!), is here.

Main image CC-licensed by Pixabay via TeroVesalainen.

This article was originally published on Nieman Lab. It was edited and republished on IJNet with permission.