Over the past two years, words like “bots”, “botnets”, and “trolls” have entered mainstream conversations about social networks and their impact on democracies. However, malicious social media accounts have often been mislabeled, derailing the conversations about them from substance to definitions.

This post lays out some of the working definitions and methodologies used by DFRLab to identify, expose, and explain disinformation online.

What is a bot?

A bot is an automated social media account run by an algorithm, rather than a real person. In other words, a bot is designed to make posts without human intervention. The Digital Forensic Research Lab (DFRLab) previously provided 12 indicators that help identify a bot. The three key bot indicators are anonymity, high levels of activity, and amplification of particular users, topics, or hashtags.

If an account writes individual posts, and it comments, replies, or otherwise engages with other users’ posts, then the account cannot be classified as a bot.

Bots are predominantly found on Twitter and other social networks that allow users to create multiple accounts.

Is there a way to tell if an account is *not* a bot?

The easiest way to check if an account is not a bot is to look at the tweets they have written themselves. An easy way to do that is by using a simple search function in Twitter’s search bar.

from:handle

If the tweets returned by the search are authentic (i.e. they were not copied from another user), it is highly unlikely that the account in question is a bot.

What is the difference between a troll and a bot?

A troll is a person who intentionally initiates online conflict or offends other users to distract and sow divisions by posting inflammatory or off-topic posts in an online community or a social network. Their goal is to provoke others into an emotional response and derail discussions.

A troll is different from a bot because a troll is a real user, whereas bots are automated. The two types of accounts are mutually exclusive.

Trolling as an activity, however, is not limited to trolls alone. DFRLab has observed trolls using bots to amplify some of their messages. For example, in August 2017, troll accounts amplified by bots targeted the DFRLab after an article on the Charlottesville protests. In this regard, bots can be, and have been, used for the purposes of trolling.

Euler diagram representing the lack of overlap between bot and troll accounts.

What is a botnet?

A botnet is a network of bot accounts managed by the same individual or group. Those who manage botnets, which require original human input prior to deployment, are referred to as bot herders or shepherds. Bots operate in networks because they are designed to manufacture social media engagement that makes the topic on which the botnet deploys appear more heavily engaged with by “real” users than it actually is. On social media platforms, engagement begets more engagement, so a successful botnet puts the topic it is deployed on in front of more real users.

What do botnets do?

The goal of a botnet is to make a hashtag, user, or keyword appear more talked about (positively or negatively) or popular than it really is. Bots target social media algorithms to influence the trending section, which would in turn expose unsuspecting users to conversations amplified by bots.

Botnets rarely target human users, and when they do, it is to spam or generally harass them, not to actively attempt to change their opinions or political views.

How to recognize a botnet?

In light of Twitter’s bot purge and enhanced detection methodology, botnet herders have become more careful, making individual bots more difficult to spot. An alternative to identifying bots one by one is to analyze the patterns of a large botnet to confirm that its individual accounts are bots.

DFRLab has identified six indicators that could help identify a botnet. If you come across a network of accounts that you suspect might be a part of a botnet, pay attention to the following.

When analyzing botnets, it is important to remember that no single indicator is sufficient to conclude that suspicious accounts are part of a botnet. Such a conclusion should be supported by at least three botnet indicators.

1. Pattern of speech

Bots run by an algorithm are programmed to use the same pattern of speech. If you come across several accounts using the exact same pattern of speech, for example tweeting out news articles using the headline as the text of the tweet, it is likely these accounts are run by the same algorithm.

Ahead of the elections in Malaysia, DFRLab found 22,000 bots, all of which were using the exact same pattern of speech. Each bot used two hashtags targeting the opposition coalition and also tagged between 13 and 16 real users to encourage them to get involved in the conversation.

Raw data of the tweets posted by bot accounts ahead of the elections in Malaysia (Source: Sysomos)
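As a rough illustration of how a shared pattern of speech could be checked in an exported set of tweets, the sketch below reduces each tweet to its structure (runs of hashtags, mentions, links, and plain words) and groups accounts by that structure. The function names, placeholders, and sample handles are hypothetical, and this is not the method DFRLab or Sysomos used.

```python
from collections import defaultdict


def speech_pattern(text):
    """Reduce a tweet to its structure, collapsing runs of the same token type."""
    tokens = []
    for word in text.split():
        if word.startswith("@"):
            token = "<USER>"
        elif word.startswith("#"):
            token = "<TAG>"
        elif word.startswith(("http://", "https://")):
            token = "<URL>"
        else:
            token = "<WORD>"
        # Collapse repeats so that 13 and 16 tagged users give the same pattern.
        if not tokens or tokens[-1] != token:
            tokens.append(token)
    return " ".join(tokens)


def accounts_by_pattern(tweets):
    """Group handles by the structure of their tweets.

    `tweets` is an iterable of (handle, text) pairs (an assumed input format).
    """
    groups = defaultdict(set)
    for handle, text in tweets:
        groups[speech_pattern(text)].add(handle)
    return dict(groups)
```

A pattern shared by a very large number of otherwise unrelated accounts is worth a closer look, but on its own it is only one indicator.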

2. Identical posts

Another botnet indicator is identical posting. Because most bots are very simple computer programs, they are incapable of producing authentic content. As a result, most bots tweet out identical posts.

DFRLab’s analysis of a Twitter campaign urging the cancellation of an Islamophobic cartoon contest in the Netherlands revealed dozens of accounts posting identical tweets.

Although individual accounts were too new to have clear bot indicators, their group behavior revealed them as likely part of the same botnet.

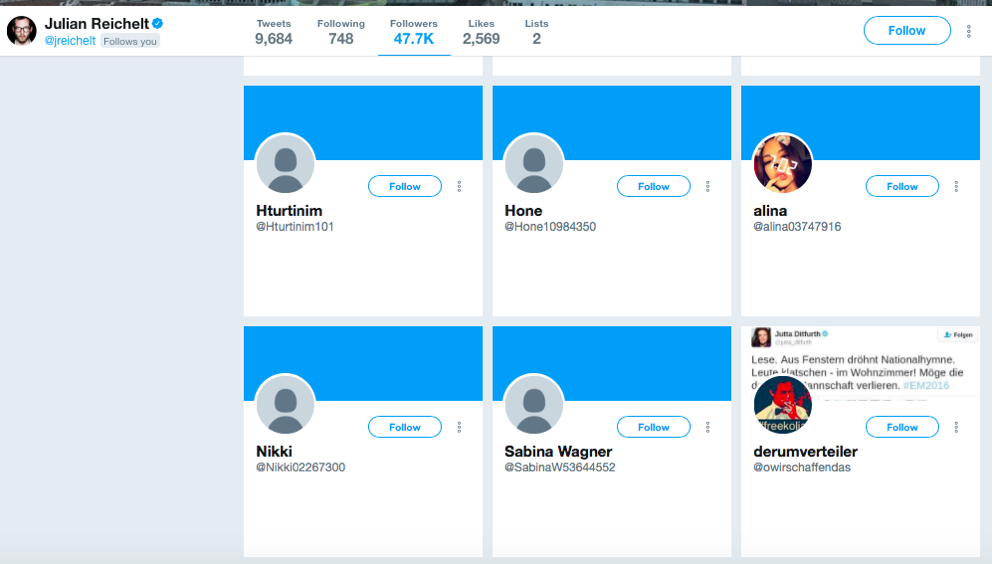
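Identical posting is straightforward to check once tweets have been exported. The following is a minimal sketch, assuming the data is a list of (handle, text) pairs; the `min_accounts` threshold is an arbitrary illustrative value, not a DFRLab figure.

```python
from collections import defaultdict


def identical_posts(tweets, min_accounts=3):
    """Return texts posted by at least `min_accounts` different handles."""
    handles_by_text = defaultdict(set)
    for handle, text in tweets:
        # Ignore differences in case and whitespace only.
        key = " ".join(text.split()).casefold()
        handles_by_text[key].add(handle)
    return {
        text: handles
        for text, handles in handles_by_text.items()
        if len(handles) >= min_accounts
    }
```

Retweets would also show up as identical text in many exports, so they may need to be filtered out first, depending on how the data was collected.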

3. Handle patterns

Another way to identify large botnets is to look at the handle patterns of the suspected accounts. Bot creators often use the same handle pattern when naming their bots.

For example, in January 2018, DFRLab came across a likely botnet in which each bot had an eight-digit number at the end of its handle.

(Source: Twitter / @jreichelt / Archived link)

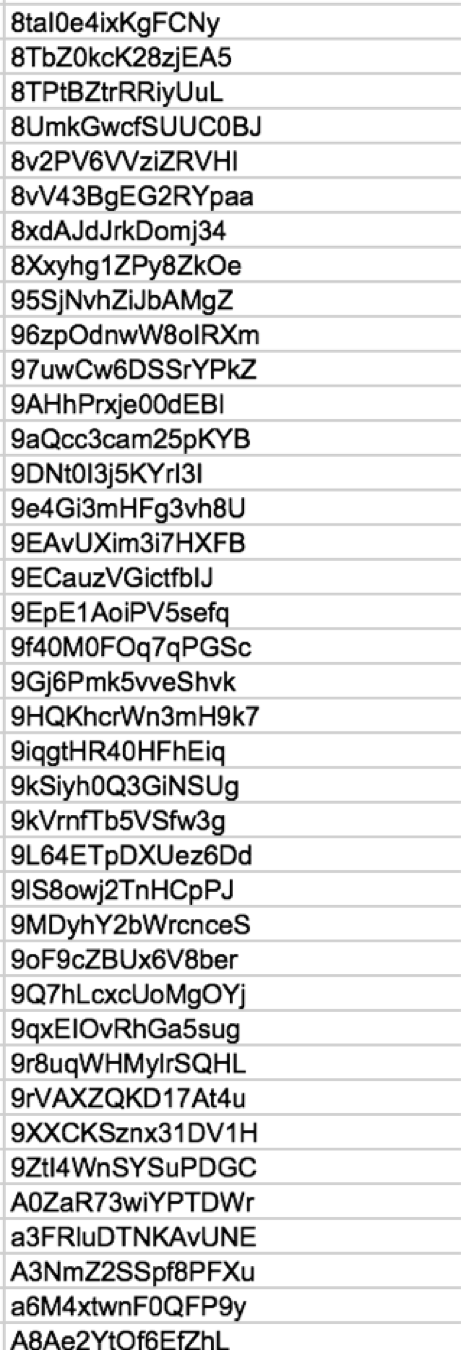

Another giveaway is systematic alphanumerical handles. The bots from a botnet that DFRLab discovered ahead of the Malaysian elections all used 15-character alphanumerical handles.

Screenshot of Twitter handles that used #SayNOtoPH and #KalahkanPakatan hashtags ahead of the Malaysian elections (Source: Sysomos)

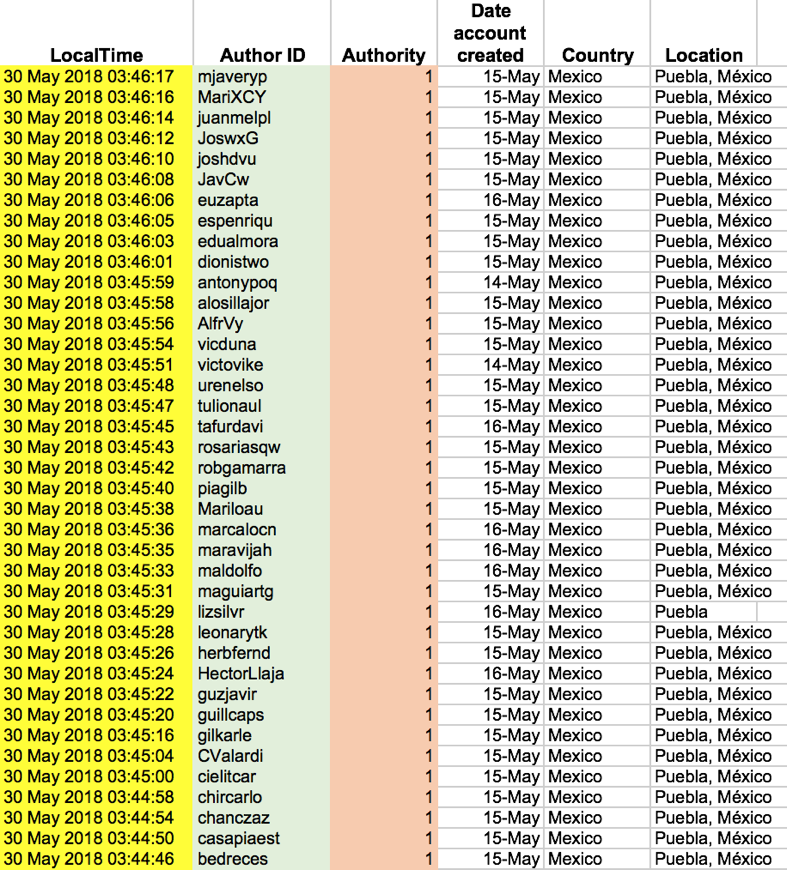

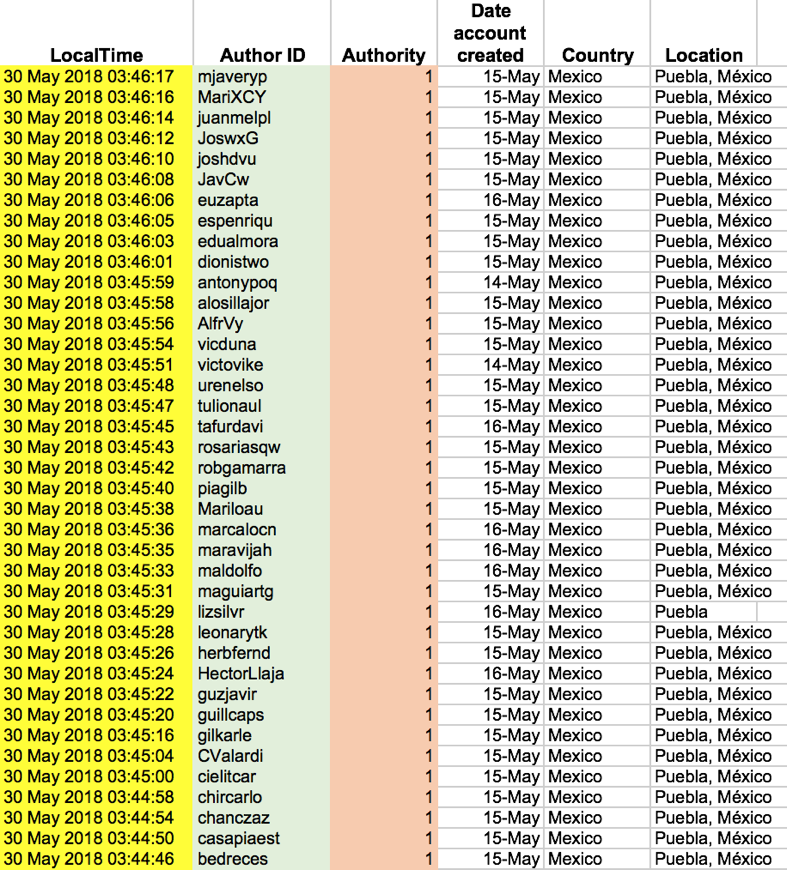
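The two handle patterns described above can be expressed as regular expressions. This is a sketch only: the requirement that a 15-character handle mix letters and digits is an assumption added here to avoid flagging ordinary 15-letter names, and the sample handles in the usage line are invented.

```python
import re
from collections import Counter

# Handle ends in exactly eight digits (nine or more digits do not match).
EIGHT_DIGIT_SUFFIX = re.compile(r"(?<!\d)\d{8}$")
# Exactly 15 letters and digits, with at least one of each (assumed rule).
MIXED_15 = re.compile(r"(?=.*\d)(?=.*[A-Za-z])[A-Za-z0-9]{15}")


def classify_handle(handle):
    """Return a label for a suspicious handle pattern, or None."""
    name = handle.lstrip("@")
    if EIGHT_DIGIT_SUFFIX.search(name):
        return "eight-digit suffix"
    if MIXED_15.fullmatch(name):
        return "15-character alphanumeric"
    return None


def handle_pattern_counts(handles):
    """Count how many handles fall under each suspicious pattern."""
    labels = (classify_handle(h) for h in handles)
    return Counter(label for label in labels if label is not None)
```

For instance, `handle_pattern_counts(["maria48213907", "DFRLab"])` counts one handle with an eight-digit suffix. Many genuine users also have digits in their handles, so the share of matching accounts in a network matters more than any single match.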

4. Date and time of creation

Bots that belong to the same botnet tend to share a similar date of creation. If you come across dozens of accounts created on the same day or over the course of the same week, it is an indicator that these accounts could be a part of the same botnet.

Raw data showing the date of creation of Twitter accounts that amplified PRI party’s candidates in the state of Puebla (Source: Sysomos)
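Clusters of creation dates can be surfaced by counting accounts per calendar week. The sketch below assumes a mapping from handle to creation date is already available; the default threshold of 24 is only a stand-in for "dozens" and should be tuned to the size of the network being examined.

```python
from collections import Counter
from datetime import date


def creation_clusters(created_on, min_accounts=24):
    """Return ISO (year, week) pairs in which many accounts were created.

    `created_on` maps a handle to the `date` the account was created.
    """
    per_week = Counter()
    for created in created_on.values():
        iso_year, iso_week, _weekday = created.isocalendar()
        per_week[(iso_year, iso_week)] += 1
    return {week: n for week, n in per_week.items() if n >= min_accounts}
```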

5. Identical Twitter activity

Another botnet giveaway is identical activity. If multiple accounts perform the exact same tasks or engage in the exact same way on Twitter, they are likely to be a part of the same botnet.

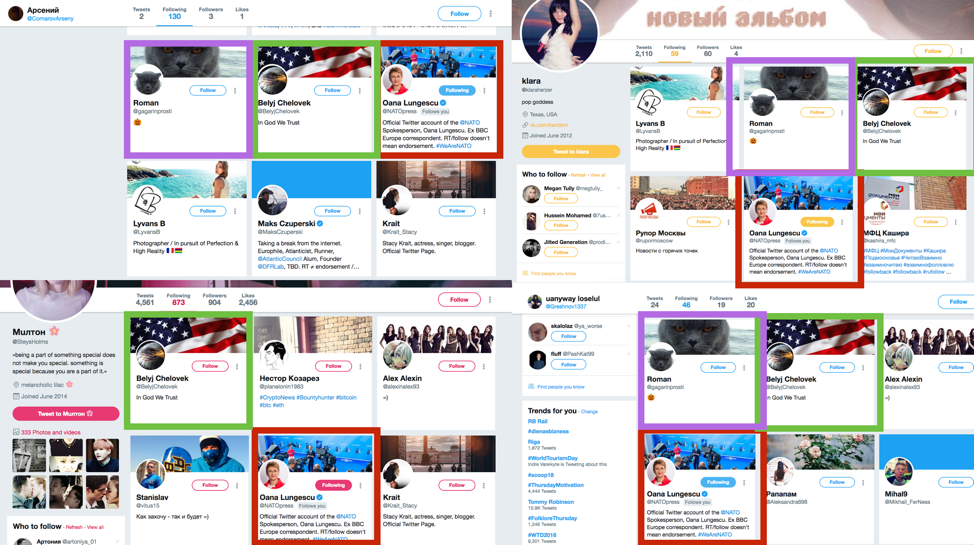

For example, the botnet that targeted the DFRLab in August 2017 had mass-followed three seemingly unconnected accounts: NATO spokesperson Oana Lungescu, the suspected bot herder (@belyjchelovek), and an account with a cat as its profile picture (@gagarinprosti).

Botnet targeting the DFRLab followed the same accounts (Source: Twitter)

Such unique activity, for example following the same unrelated users in a similar order, cannot be a mere coincidence when done by a number of unconnected accounts and, therefore, serves as a strong botnet indicator.
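One way to look for this kind of shared behavior is to count which accounts are followed by nearly all of the suspects. The sketch below assumes the list of followed accounts has already been collected for each suspect; the `min_share` threshold is illustrative, and the sketch ignores the order in which accounts were followed.

```python
from collections import Counter


def shared_followings(following, min_share=0.9):
    """Return accounts followed by at least `min_share` of the suspects.

    `following` maps each suspect handle to the handles it follows.
    """
    if not following:
        return []
    counts = Counter()
    for followed in following.values():
        counts.update(set(followed))  # count each suspect at most once
    total = len(following)
    return sorted(h for h, n in counts.items() if n / total >= min_share)
```

Very popular accounts are followed by many unrelated users, so the signal is strongest when the shared accounts are obscure or unrelated to one another.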

6. Location

A final indicator, which is especially common among political botnets, is a single location shared by many suspicious accounts. Political bot herders tend to set the location to the place where the candidate or party they are promoting is running, in an attempt to get their content to trend in that particular constituency.

For example, ahead of the elections in Mexico, a botnet promoting two PRI party candidates in the state of Puebla used Puebla as their location.

Raw data showing the location of Twitter bots that amplified PRI party’s candidates in the state of Puebla (Source: Sysomos)

This was likely done to ensure that real Twitter users from Puebla saw the bot-amplified tweets and posts.
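The rule of thumb stated earlier, that a botnet claim should rest on at least three of the six indicators, can be written down as a simple check. The indicator labels follow the section titles above; the function name is hypothetical.

```python
INDICATORS = (
    "pattern of speech",
    "identical posts",
    "handle patterns",
    "date and time of creation",
    "identical Twitter activity",
    "location",
)


def supports_botnet_claim(observed, minimum=3):
    """Return True if at least `minimum` distinct indicators were observed."""
    found = set(observed)
    unknown = found - set(INDICATORS)
    if unknown:
        raise ValueError(f"unknown indicators: {sorted(unknown)}")
    return len(found) >= minimum
```

This only counts indicators. Judging how strong each one is for a given network still requires a human analyst.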

Are all bots political bots?

No, the majority of bots are commercial bot accounts, meaning that they are run by groups and individuals who amplify whatever content they are paid to promote. Commercial bots can be hired to promote political content.

Political bots, on the other hand, are created for the sole purpose of amplifying political content of a particular party, candidate, interest group, or viewpoint. DFRLab found several political botnets promoting PRI party’s candidates in the state of Puebla ahead of the Mexican elections.

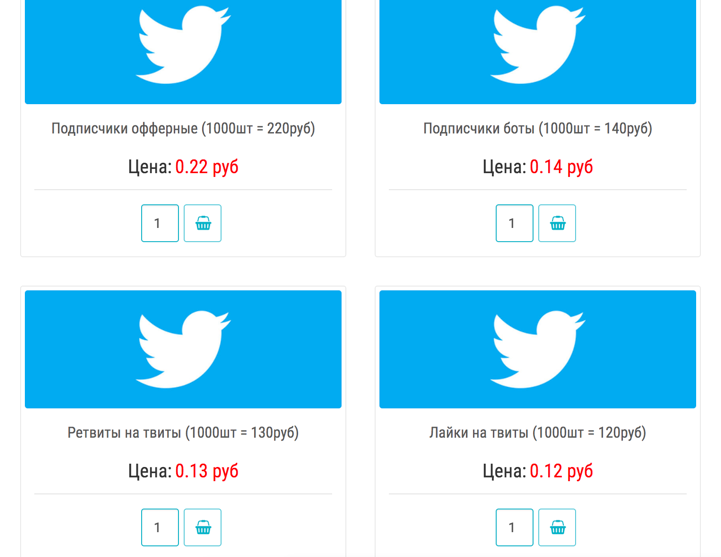

Are all Russian bots affiliated with the Russian state?

No, many botnets that have Russian-sounding or Cyrillic handles or usernames are run by entrepreneurial Russians looking to make a living online. Many companies and individuals on the Russian internet openly sell Twitter, Facebook, and YouTube followers and subscribers, as well as engagement such as retweets and shares. Although these services are very cheap (about US$3 for 1,000 followers), a bot herder with 1,000 bots could make more than US$33 per day by having each bot follow 10 users daily. That adds up to roughly US$900 to US$1,000 per month, which is about twice the average salary in Russia.

Translation from Russian: 1,000 followers for RUB 220 (US$3.34), 1,000 retweets for RUB 130 (US$1.97), 1,000 likes for RUB 120 (US$1.82) (Source: Doctosmm.com)
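The income estimate can be reproduced with a few lines of arithmetic. The sketch below assumes every follow by a bot is sold at the per-1,000 rate and that a month has 30 days; the function name is hypothetical.

```python
def herder_income(bots, follows_per_bot_per_day, price_per_1000_follows,
                  days_per_month=30):
    """Return (daily, monthly) income in US dollars under the stated assumptions."""
    follows_per_day = bots * follows_per_bot_per_day
    daily = follows_per_day / 1000 * price_per_1000_follows
    return daily, daily * days_per_month


for price in (3.00, 3.34):
    daily, monthly = herder_income(1000, 10, price)
    print(f"US${price:.2f} per 1,000 follows: "
          f"US${daily:.2f}/day, US${monthly:.2f}/month")
```

At the rounded price of US$3 this gives US$30 per day and US$900 per month. At the listed price of US$3.34 it gives US$33.40 per day and about US$1,002 per month.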

DFRLab has observed commercial Russian botnets amplifying political content worldwide. Ahead of the elections in Mexico, for example, we found a botnet amplifying the Green Party in Mexico. These bots, however, were not political and amplified a variety of different accounts ranging from a Japanese tourism mascot to the CEO of an insurance agency.

Conclusion

Bots, botnets, and trolls are easy to tell apart and identify with the right methodology and tools. The one key thing to remember, however, is that an account should not be considered a bot or a troll until it has been meticulously proven to be one.

This post was originally published by the Atlantic Council's Digital Forensic Research Lab (DFRLab). It was republished on IJNet with permission.

Donara Barojan is a Digital Forensic Research Associate at DFRLab.

Main image CC-licensed by Pixabay via mohamed_hassan.