Artificial Intelligence (AI) is transforming journalism worldwide, but much of the conversation about its impact has been dominated by perspectives from the Global North.

A new report from the Thomson Reuters Foundation (TRF), based on findings from a survey of over 200 journalists from more than 70 countries in the Global South and emerging economies, aims to address that.

The study, which I authored, sheds light on how AI is being used, the unique challenges these newsrooms face, and the implications of this for journalists, newsroom leaders, funders and policy makers. Here are some of the key findings:

(1) AI adoption is widespread, but uneven

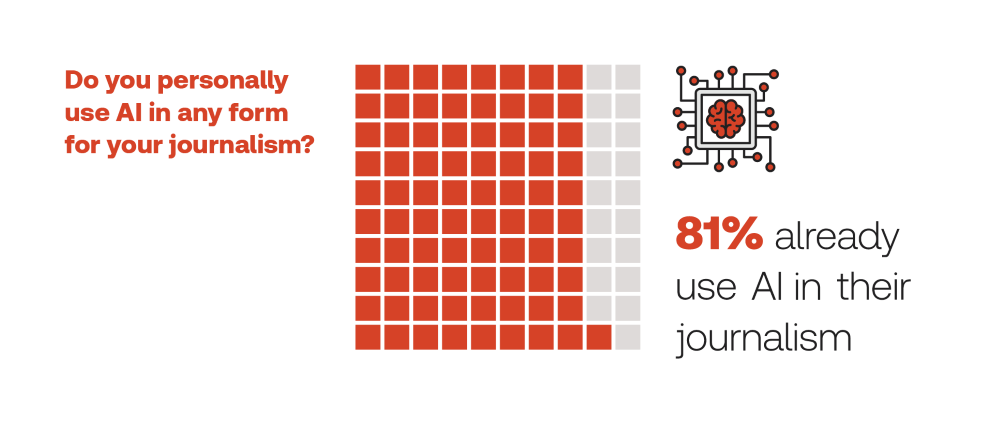

The survey found “cautious optimism” about AI technologies, a sentiment that perhaps belies how significant AI adoption already is across the report’s sample in the Global South. More than eight in 10 journalists (81.7%) use AI tools in their work.

Almost half of them (49.4%) use AI daily, a sign of how quickly the technology has become part of their workflow.

Journalists are primarily using generative AI for drafting and editing content, transcription, fact-checking and research. Tools like ChatGPT, Grammarly, Otter, and Canva have rapidly become essential for many journalists because they save time and improve efficiency while also helping them develop ideas and be more creative.

As one journalist from Ghana told us, "AI has significantly enhanced my journalism. It has streamlined my research and allowed me to analyze complex data quickly and accurately, particularly in areas like HIV research and environmental reporting."

(2) Barriers to AI adoption: Access, training, and policy gaps

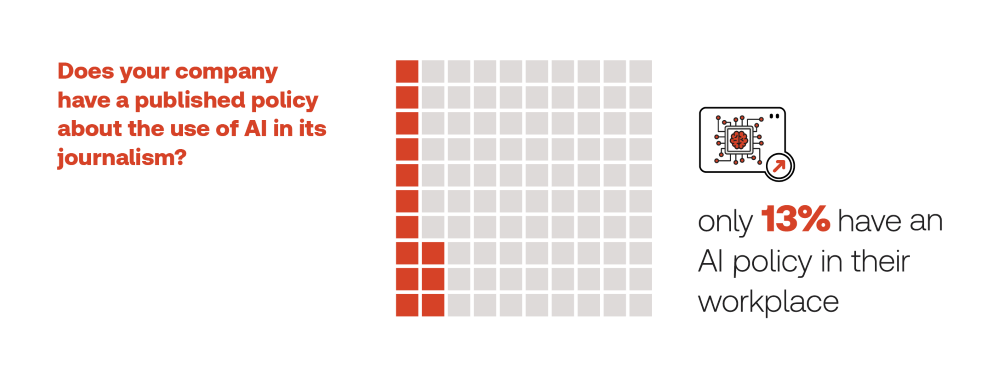

Yet, for all the enthusiasm around AI, the survey also revealed a stark reality: only 13% of respondents reported that their newsroom has a formal AI policy. This lack of structured guidance means that AI use is often left to individual journalists, creating inconsistent practices and raising ethical concerns of which journalists are keenly aware.

AI adoption also faces other hurdles. Many journalists reported barriers such as limited access to AI tools, high costs, and a lack of training, alongside a lack of guidance from their management. For some, particularly those in low-resource newsrooms, AI adoption remains challenging, often because of financial and technological constraints.

One of the most striking findings was that nearly 58% of AI users are self-taught, with little or no formal training from their employers. This offers an opportunity for funders, as many journalists expressed a strong desire for AI-focused workshops, ethical guidelines, and newsroom policies that could help them navigate AI’s potential responsibly.

As a newsroom manager in the United Arab Emirates explained: “Clear ethical frameworks and regulatory guidelines for AI usage in newsrooms are essential to maintain transparency and audience trust, especially around AI-generated content.”

(3) The risks: Misinformation, bias, and job security

While AI has clear benefits, journalists are also wary of its risks. An editor from Pakistan summed this up when they said: “AI has the potential to completely reshape journalism, offering tools that can enhance efficiency and content creation. However, there’s uncertainty about whether this change will be entirely positive.”

Among the top concerns expressed by survey respondents were:

- Misinformation and bias: Nearly half (49%) of respondents worry about AI amplifying false or misleading content, especially given that most AI models are trained on Western-centric datasets.

- Erosion of journalistic skills: Many fear that over-reliance on AI could weaken editorial judgement, original reporting, and creativity. “We should not allow it to take over critical thinking,” said one respondent from Uganda.

- Job displacement: With AI automating tasks like news summaries and content creation, some journalists fear it could lead to job losses, particularly for entry-level positions. A journalist from Kenya shared their concerns, saying: "I fear AI would render a huge majority of journalists jobless due to loss of work to AI and AI-powered systems."

There was also a fear that AI would lead to more generic content and a loss of distinctiveness. As an editor from Saudi Arabia put it: “If everyone uses the same tools in the same way [… this] may cause journalists to lose their distinction and uniqueness.”

(4) The path forward: Ethics, regulation and training

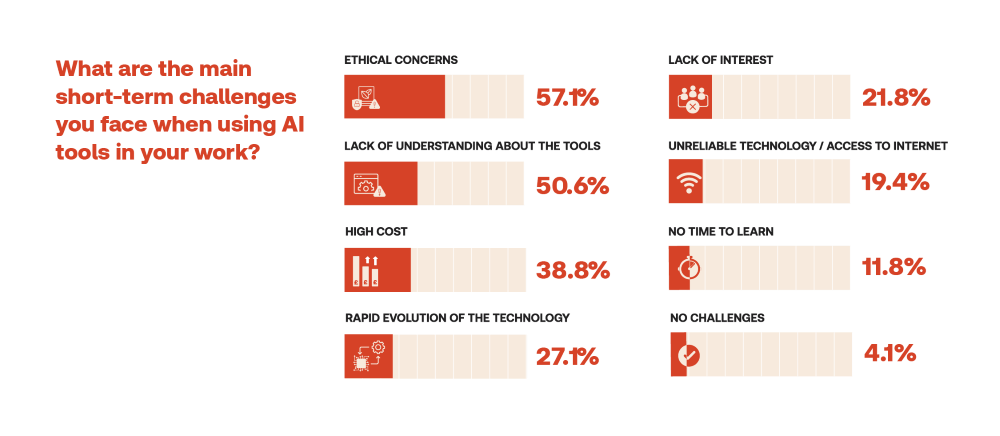

As AI continues to reshape journalism, many survey respondents emphasized the need for industry-wide ethical guidelines and regulatory frameworks. Over half (57.1%) of journalists highlighted ethical concerns as the most pressing short-term challenge that they see in this space.

Some journalists advocate for AI transparency policies, requiring newsrooms to disclose when AI has been used in reporting. Others call for government and industry regulations to address misinformation, data privacy, and fair AI access for newsrooms in developing markets.

“It would be good to see more discussion about ethical issues with the use of AI in the professional community,” said a journalist from Russia. “I am concerned that, in pursuit of audience attention and traffic, publications are neglecting journalistic standards.”

Looking ahead, journalists in the Global South see AI as a tool that could enhance their work — if used responsibly.

One respondent from India explained this dichotomy: “AI can offer significant advantages — speed, data analysis, and efficiency — that could enhance journalism’s reach and accessibility. However, it also presents challenges like misinformation and risks to journalistic integrity, making the overall impact a mixed but cautiously optimistic one.”

Conclusion: A call to action

The report concludes by offering recommendations for journalists, funders, media development organizations and policy makers to help ensure that AI truly benefits journalism in the Global South. These cover activities in five key areas:

(1) Training and skills development: Investing in AI literacy and ethical training for journalists.

(2) Ethical frameworks: Developing guidelines that prioritize transparency and accountability within newsrooms, and sharing these guidelines with audiences.

(3) Industry collaboration: Encouraging partnerships between media organizations, developers, and funders to create AI tools suited for diverse journalism needs.

(4) Regulation and policy: Establishing legal safeguards to protect journalism’s role (including our sources) in the AI age, and ensuring that Western and English-language biases in AI systems are actively addressed.

(5) Equitable access: Ensuring that AI benefits are not limited to large, well-resourced newsrooms.

As AI adoption accelerates, journalists, newsrooms and policymakers must work together to ensure that technology strengthens, rather than weakens, the core principles of journalism.

Despite widespread adoption, just 42% of our sample was positive about the future use of AI technologies, suggesting that the jury is still out on what the impact of these tools will be. Our report outlines these concerns and how to address them. It will be fascinating to see if the AI needle shifts in the coming years, and whether journalists in the Global South will continue to see AI as an asset, or as a potential liability and threat, for their work in the future.

Elise Racine & The Bigger Picture / Better Images of AI / Web of Influence I / CC-BY 4.0.